A Further Search About Icing on the Cake

1. Introduction to Icing on the Cake

This post takes a closer look at the paper “Icing on the Cake: An Easy and Quick Post-Learning Method You Can Try After Deep Learning” (https://arxiv.org/abs/1807.06540).

The method, “Icing on the Cake”, is a quick and easy way to improve the accuracy of deep-learning image classifiers. (Hereafter, “Icing on the Cake” is abbreviated as “ICK”.)

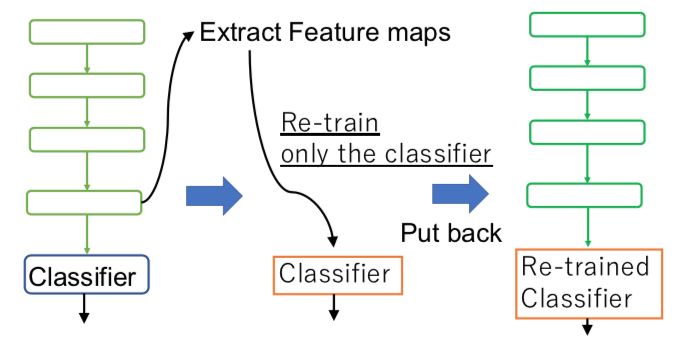

The procedure is as follows:

Step-1: Train a deep neural network as usual.

Step-2: Extract features from the layer just before the final classifier, with the network in estimation (inference) mode.

Step-3: Retrain only the final layer on the extracted features.

Step-4: Put the retrained final classifier back into the original network.
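Step-3 is the only step that needs new training code. The sketch below is a minimal, dependency-free illustration, assuming the Step-2 features are already available as plain Python lists: it re-initializes a linear softmax classifier and fits it with full-batch gradient descent on a cross-entropy loss. The names `retrain_final_layer`, `linear` and `predict`, and the values of `lr` and `epochs`, are hypothetical; this is not the paper's implementation.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [v / total for v in exps]


def linear(weights, bias, x):
    """Logits of a fully connected layer: one dot product plus bias per class."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def predict(weights, bias, x):
    """Index of the class with the largest logit."""
    logits = linear(weights, bias, x)
    return max(range(len(logits)), key=logits.__getitem__)


def retrain_final_layer(features, labels, num_classes, lr=0.5, epochs=200, seed=0):
    """Hypothetical ICK Step-3: fit a freshly initialized final layer on frozen features.

    `features` stand in for the Step-2 outputs of the layer just before the
    classifier; the feature extractor itself is never touched here.
    """
    rng = random.Random(seed)
    dim = len(features[0])
    n = len(features)
    # Re-initialize the final layer: small random weights, zero bias.
    weights = [[rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in range(num_classes)]
    bias = [0.0] * num_classes
    for _ in range(epochs):
        grad_w = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(features, labels):
            probs = softmax(linear(weights, bias, x))
            # Cross-entropy gradient w.r.t. logit c is p_c - 1[c == y].
            for c in range(num_classes):
                err = probs[c] - (1.0 if c == y else 0.0)
                grad_b[c] += err
                for j in range(dim):
                    grad_w[c][j] += err * x[j]
        # Full-batch gradient descent step on the averaged gradient.
        for c in range(num_classes):
            bias[c] -= lr * grad_b[c] / n
            for j in range(dim):
                weights[c][j] -= lr * grad_w[c][j] / n
    return weights, bias


# Toy 2-D "features" for illustration only (made up, not experimental data).
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [0, 0, 1, 1]
weights, bias = retrain_final_layer(features, labels, num_classes=2)
accuracy = sum(predict(weights, bias, x) == y for x, y in zip(features, labels)) / len(labels)
print(accuracy)
```

In a real network, Step-4 would then overwrite the original final layer's parameters with `weights` and `bias`, while every earlier layer is reused unchanged.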

It is not rare for training a deep neural network to take a week or so. Because “Icing on the Cake” retrains only the final layer, it takes little time: about five minutes. It really is icing on the cake. The authors do not claim that it works in every case, and they cannot yet prove that it holds for all networks, but they argue that the method is worth trying and does no harm.

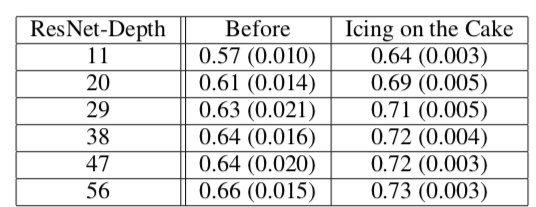

Some of the paper's experimental results are summarized in the figure above.

The authors left one question open: why ICK works. They ran experiments in an effort to find a counterexample but could not; instead, they ended up collecting more positive evidence.

2. Further research on ICK

1. Checking the results of ICK

Because the authors did not publish the implementation details of ICK, we could not check or use the method directly, so we re-implemented ICK from the description in the paper.

The original steps are concise but not efficient. In this research we changed some of them, but the two versions are essentially equivalent.

To make the results convincing, we:

(1) used a high-performance ResNet implementation;

(2) tuned the learning rate so that the model reaches its best attainable accuracy;

(3) saved every checkpoint so that the whole training process can be inspected.

I trained many times to find a suitable learning rate. The learning rate changes with the epoch, so the training curves show distinct stages.
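A staged schedule like this can be written as a piecewise-constant function of the epoch. The sketch below assumes the stage boundaries match the epoch ranges discussed in the results (1~30, 31~60, 61~120); the rates 0.1, 0.01 and 0.001 and the name `learning_rate` are hypothetical placeholders, since the post does not state the values actually used.

```python
def learning_rate(epoch, boundaries=(30, 60), rates=(0.1, 0.01, 0.001)):
    """Piecewise-constant learning rate for a 1-indexed epoch.

    With the defaults, epochs 1-30 use 0.1, epochs 31-60 use 0.01, and all later
    epochs (61-120 here) use 0.001. The rate values are placeholders.
    """
    if len(rates) != len(boundaries) + 1:
        raise ValueError("need exactly one more rate than boundaries")
    for boundary, rate in zip(boundaries, rates):
        if epoch <= boundary:
            return rate
    return rates[-1]


# One (epoch, learning rate) pair per epoch; the training loop would save a
# checkpoint at the end of every epoch so each stage can be inspected later.
schedule = [(epoch, learning_rate(epoch)) for epoch in range(1, 121)]
print(schedule[0], schedule[30], schedule[60])
```

Keeping the boundaries and rates as parameters makes it easy to try other stage layouts while searching for a good learning rate.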

The experimental results are shown in the figure below:

(*) Red lines separate the training stages, each with a different learning rate. The blue curve is the BASE method; the orange curve is the ICK method.

(1) ICK is indeed useful when the model has not fully converged. In epochs 1~30, ICK improves the model noticeably; in epochs 31~60, it improves the model only slightly.

(2) Unfortunately, ICK is not useful once the model has converged. In epochs 61~120, ICK's accuracy is even worse than BASE's. The drop may be caused by overfitting, but it still shows that in this situation ICK cannot easily improve performance.

2. Explaining ICK

1. Understanding the classification model

Today, most classification models are built like this:

[1] layers that extract features;

[2] layers that classify (in most cases, fully connected layers).

Usually, we modify the feature-extraction layers, hoping that a more complex structure will help us get more information from the data.

(1) According to the SVCCA paper (SVCCA = SVD + CCA), networks converge “bottom up”: lower layers settle into their final representations before higher layers do. While training is unfinished, feature extraction is unfinished too, so the classification layer cannot learn well from the unfinished extraction layers.

(*) The SVCCA paper includes an experiment demonstrating this “bottom-up” phenomenon.
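The “bottom-up” claim can be phrased as a simple check on per-layer similarity curves. The sketch below assumes those curves are already available, one per layer ordered from input to output, where each value is the similarity (for example a mean SVCCA correlation) between the layer at a checkpoint and the same layer at the end of training. The SVCCA computation itself is not shown, and the 0.95 threshold and the function names `first_converged` and `converges_bottom_up` are hypothetical.

```python
def first_converged(curve, threshold=0.95):
    """Index of the first checkpoint whose similarity reaches `threshold`, or None."""
    for t, sim in enumerate(curve):
        if sim >= threshold:
            return t
    return None


def converges_bottom_up(curves, threshold=0.95):
    """True if every layer (ordered input -> output) converges no later than the layer above it.

    curves[l][t] is assumed to be the similarity between layer l at checkpoint t and
    layer l at the end of training, e.g. a mean SVCCA correlation computed elsewhere.
    """
    times = [first_converged(curve, threshold) for curve in curves]
    if any(t is None for t in times):
        return False
    return all(lower <= upper for lower, upper in zip(times, times[1:]))


# Made-up curves for three layers over four checkpoints (illustrative only).
toy_curves = [
    [0.90, 0.97, 0.99, 1.00],  # lowest layer reaches the threshold first
    [0.60, 0.85, 0.96, 1.00],
    [0.30, 0.60, 0.90, 1.00],  # top layer reaches the threshold last
]
print([first_converged(c) for c in toy_curves], converges_bottom_up(toy_curves))
```

The last value of each curve is 1.0 by construction, since a layer at the end of training is compared with itself.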

2. Why ICK behaves this way

(1) A fully connected (FC) layer can not only classify but also extract features. Therefore, with ICK, the retrained FC layer takes the unfinished features and does both extraction and classification.

(2) When feature extraction is already good enough, ICK does not help, because the FC layer cannot extract any more information.

In my opinion, you cannot truly improve performance without changing the model structure.

We use SVCCA to inspect the layers during training; with this tool we can estimate the similarity between layers. To check our idea, we want to show:

3. Summary

Some conclusions

1. ICK works in some situations

When ICK works, feature extraction is unfinished. The retrained fully connected layer then does two jobs, extraction and classification, so performance improves.

2. ICK doesn’t work in some situations

When ICK does not work, feature extraction is already finished, and re-initializing and retraining the final layer cannot improve performance.

Applications of ICK

1. Checking your classification training

You can use ICK to check whether your network has converged. If ICK improves accuracy, your network may be under-trained; consider training for more epochs or adjusting the learning rate.
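This rule of thumb can be written as a one-line comparison. The sketch below assumes both accuracies are fractions measured on the same held-out set; the function name `ick_suggests_more_training` and the 0.5-percentage-point threshold `min_gain` are hypothetical choices, not values from the paper or from the experiments above.

```python
def ick_suggests_more_training(base_acc, ick_acc, min_gain=0.005):
    """True if the ICK-retrained classifier beats the base model by more than `min_gain`.

    Both accuracies are fractions in [0, 1], measured on the same held-out set.
    A clear gain hints that feature extraction is unfinished (under-training).
    """
    if not (0.0 <= base_acc <= 1.0 and 0.0 <= ick_acc <= 1.0):
        raise ValueError("accuracies must be fractions in [0, 1]")
    return ick_acc - base_acc > min_gain


# Hypothetical accuracies, for illustration only.
print(ick_suggests_more_training(0.60, 0.65))   # clear gain: consider training longer
print(ick_suggests_more_training(0.76, 0.755))  # no gain: the network has likely converged
```

A small positive threshold keeps run-to-run noise from being read as a sign of under-training.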

2. A fast way to finish your network

Sometimes we don't need a state-of-the-art network, just an ordinary one that produces results. In that case, ICK can quickly finish an under-trained network. In the example above (ResNet18, CIFAR100), ICK reaches 67% accuracy at epoch 30!
